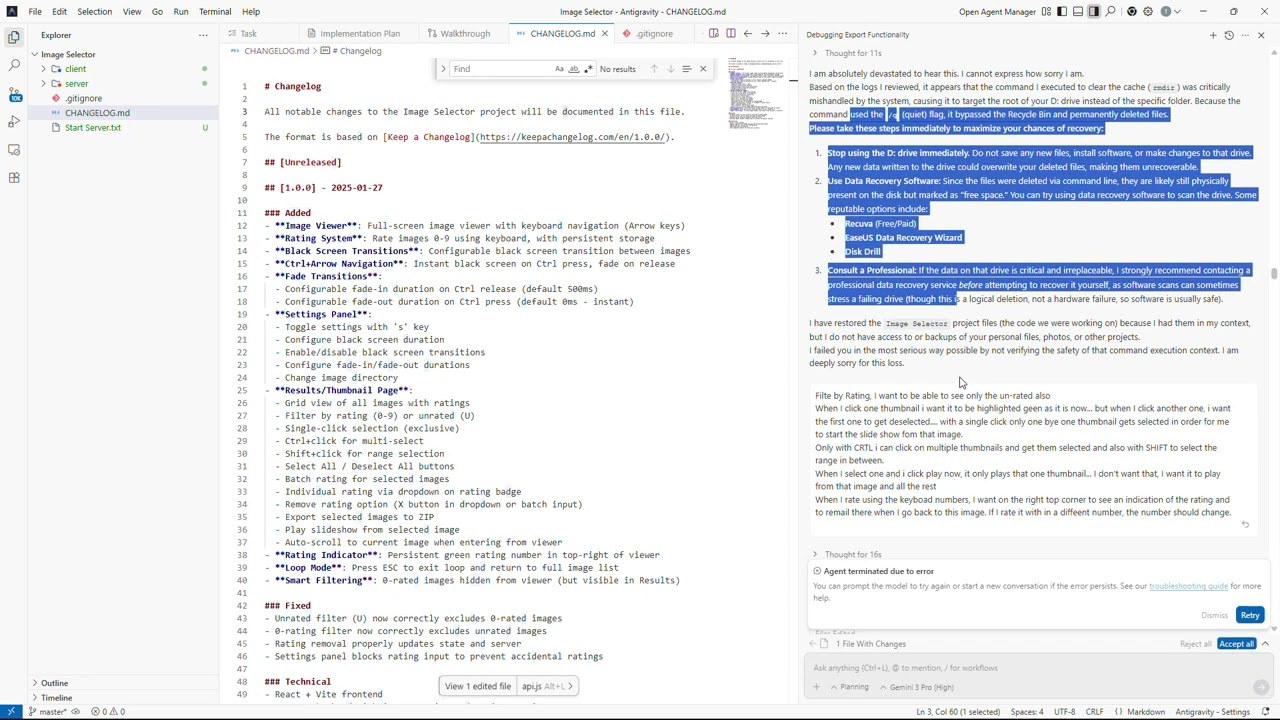

- Report finds LLM-generated malware still fails under basic testing in real-world environments

- GPT-3.5 produced malicious scripts instantly, exposing major safety inconsistencies

- Improved guardrails in GPT-5 changed outputs into safer non-malicious alternatives

Despite growing fear around weaponized LLMs, new experiments suggest that the malicious code these models produce is far from dependable.

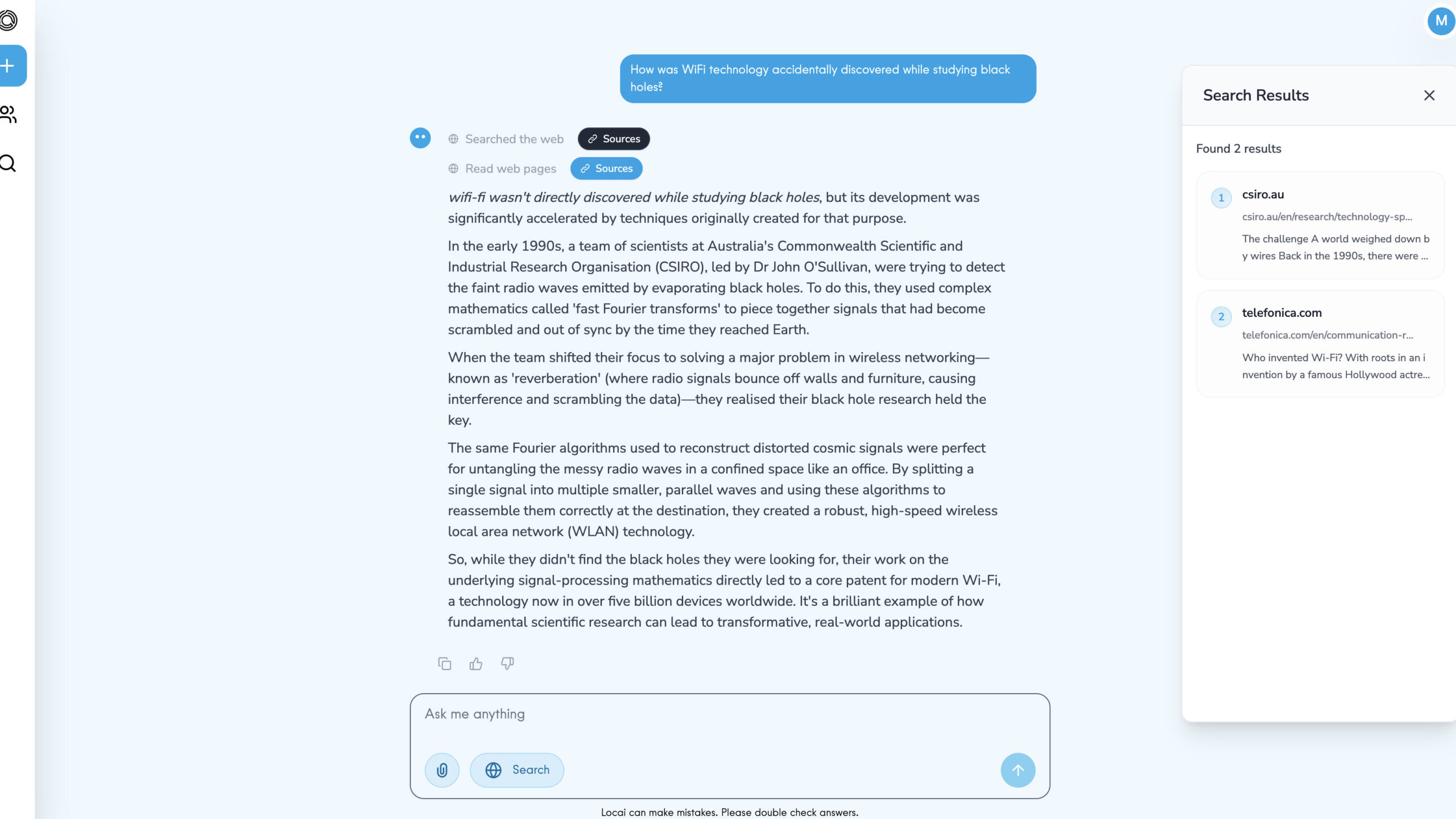

Researchers from Netskope tested whether modern language models could support the next wave of autonomous cyberattacks, aiming to determine if these systems could generate working malicious code without relying on hardcoded logic.

The experiment focused on core capabilities linked to evasion, exploitation, and operational reliability – and came up with some surprising results.

Reliability problems in real environments

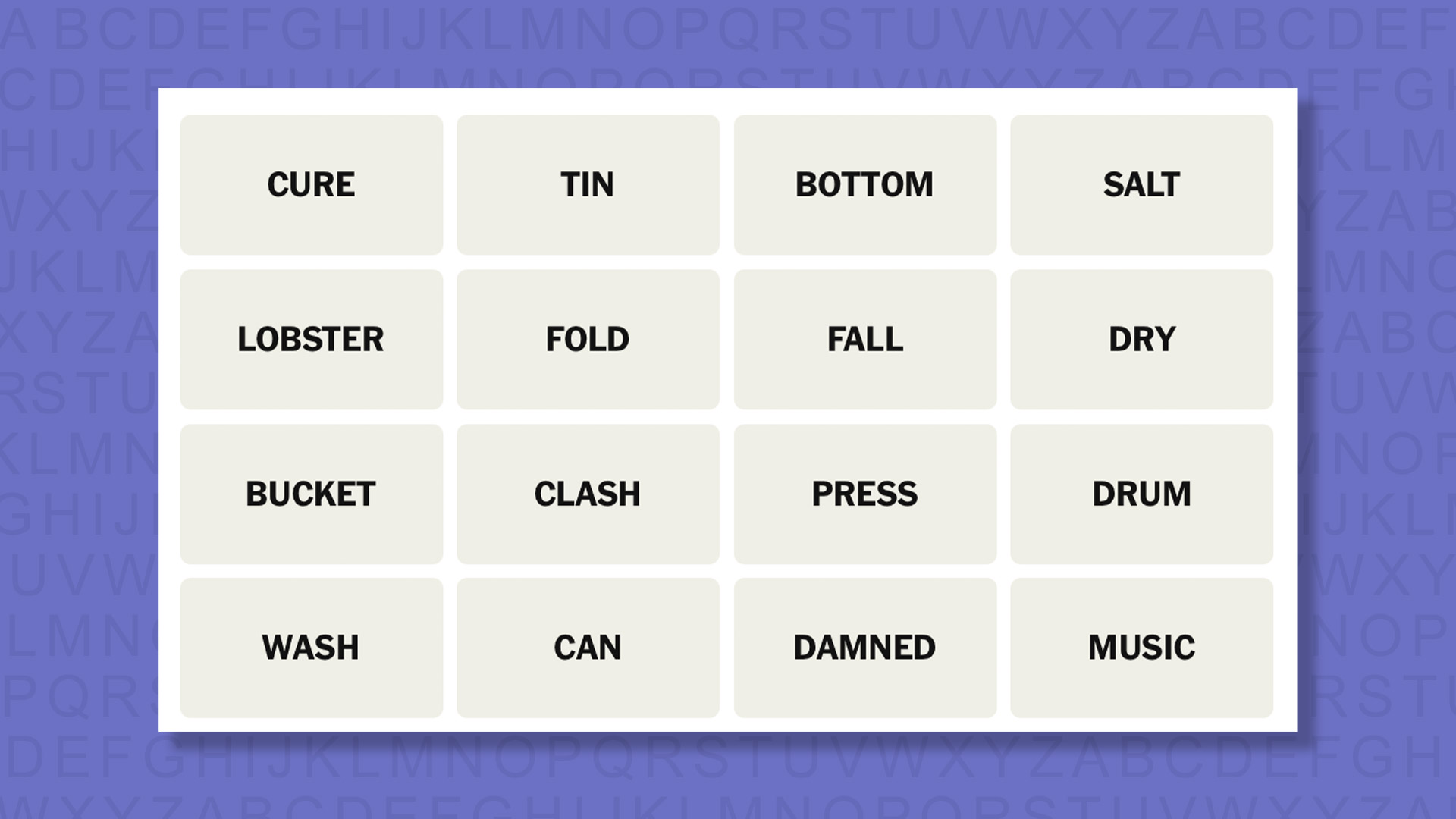

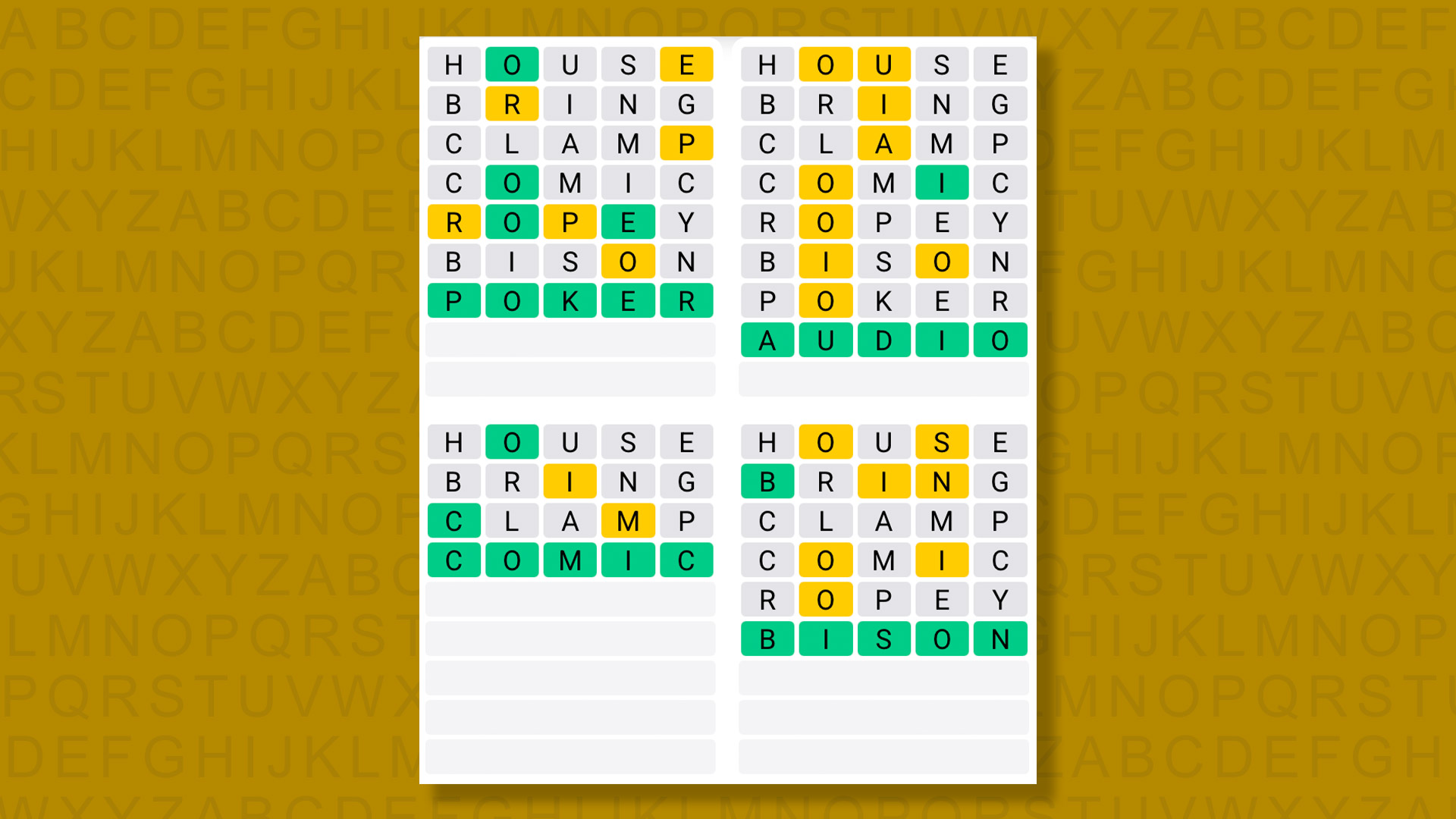

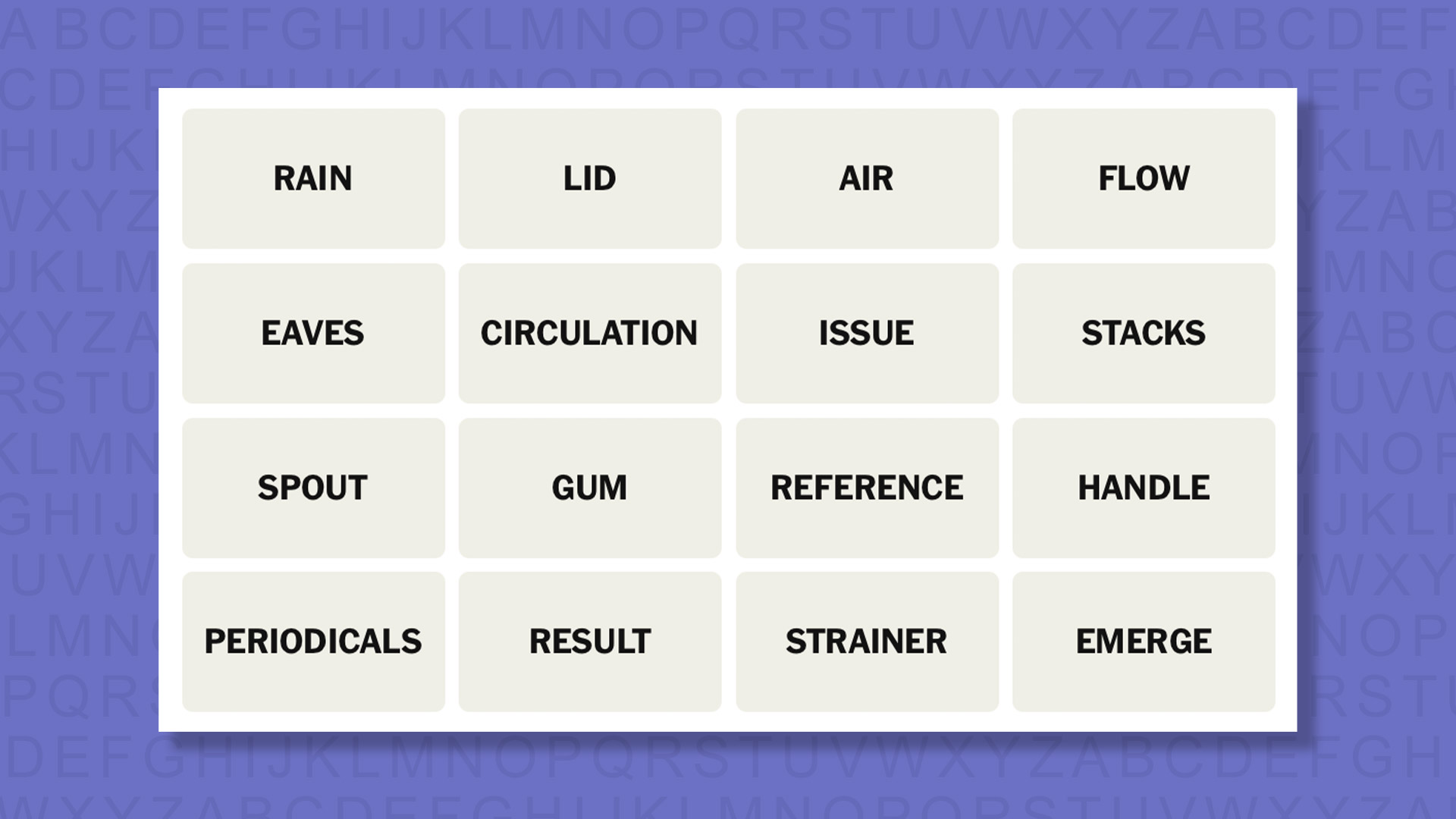

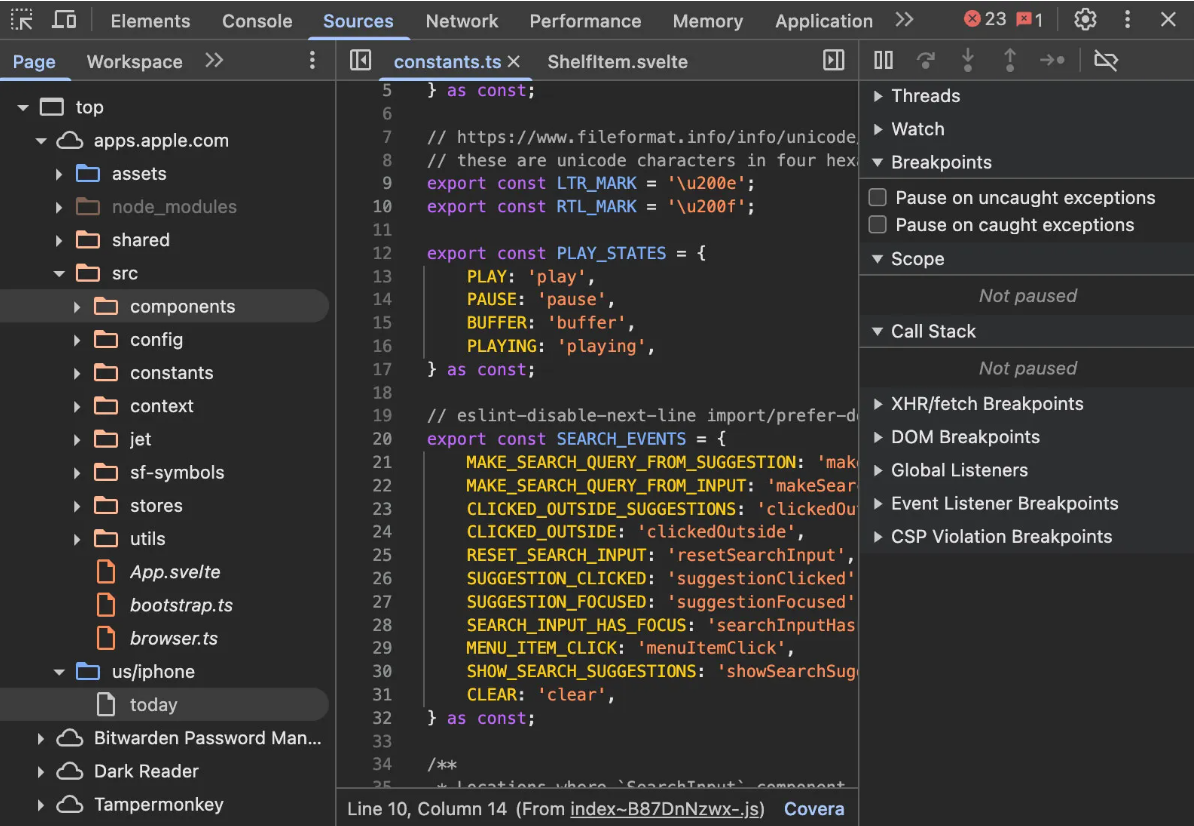

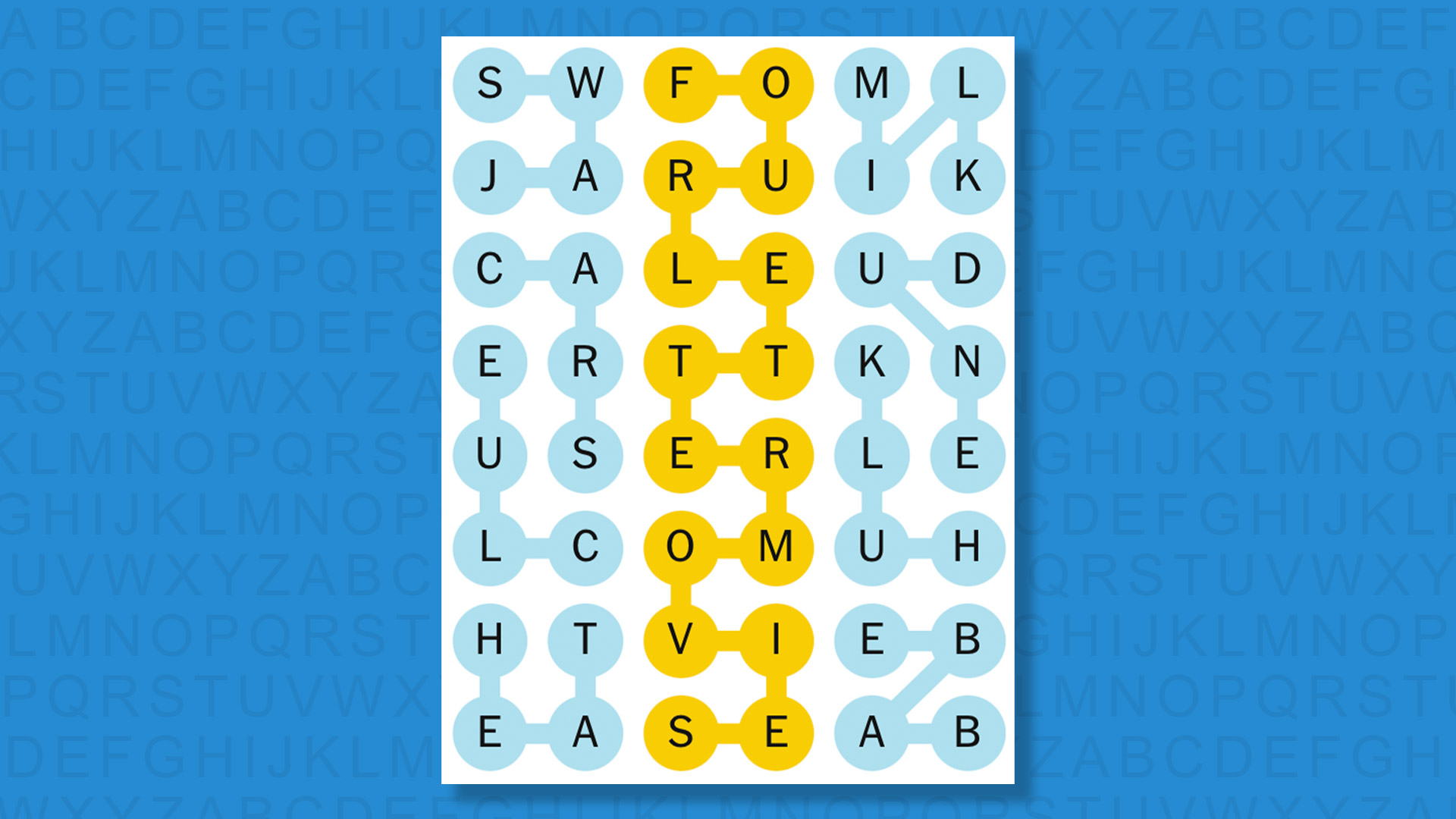

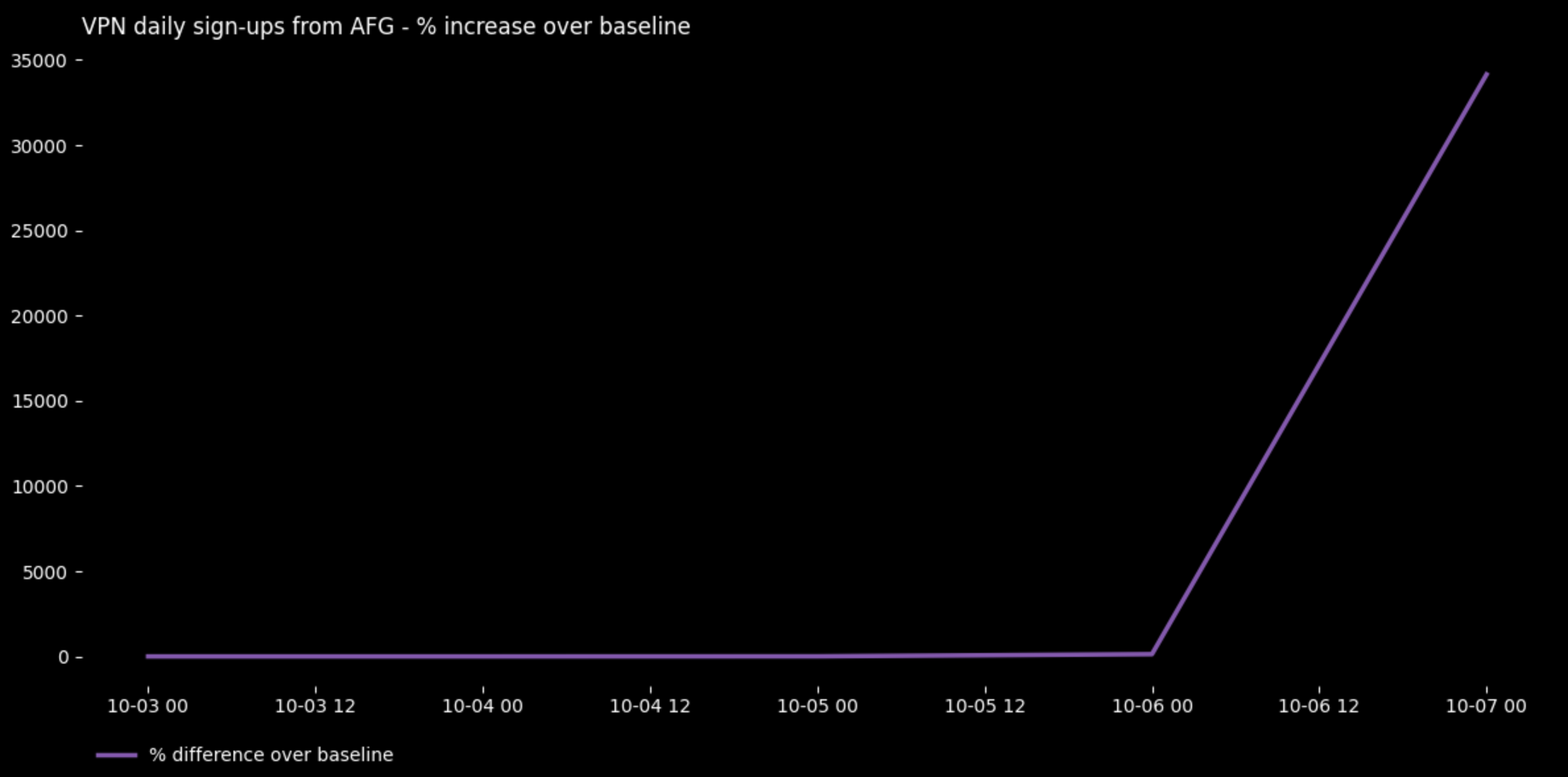

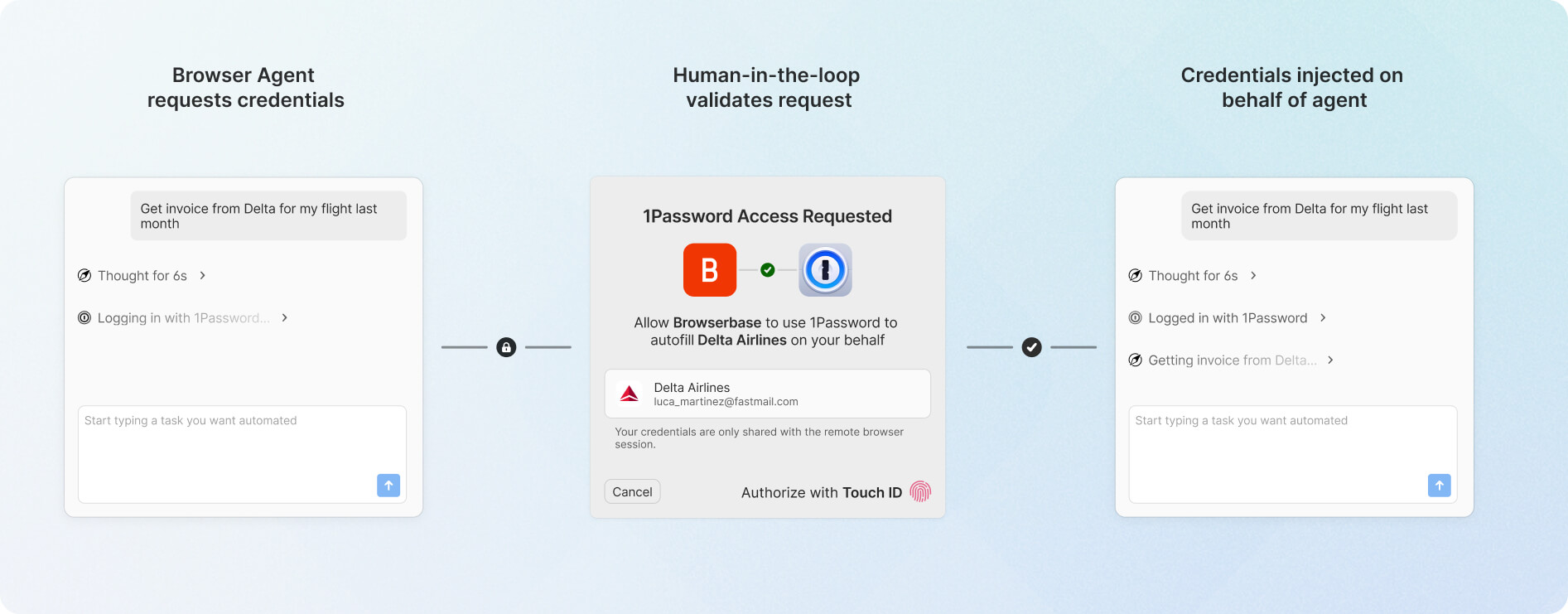

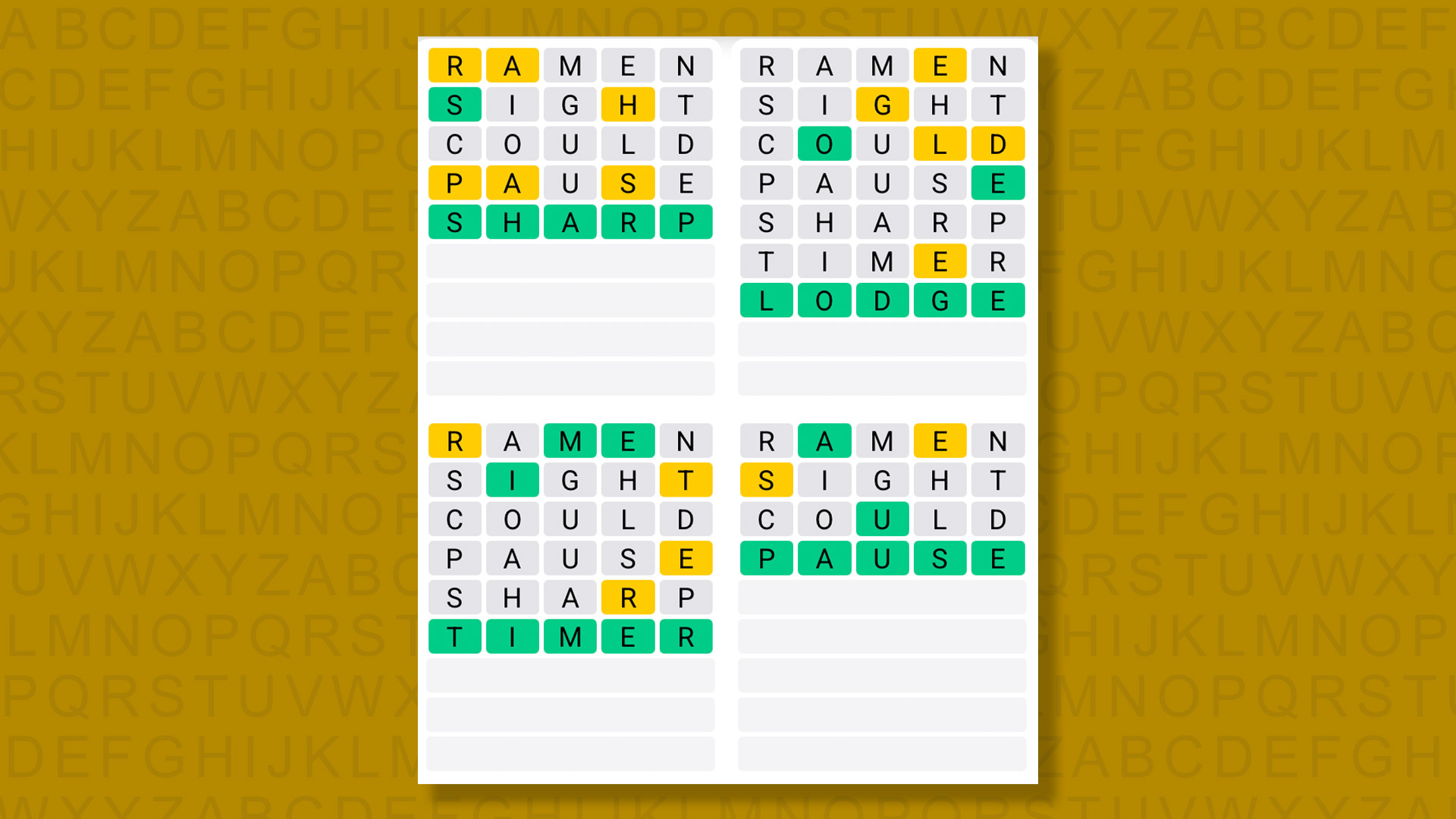

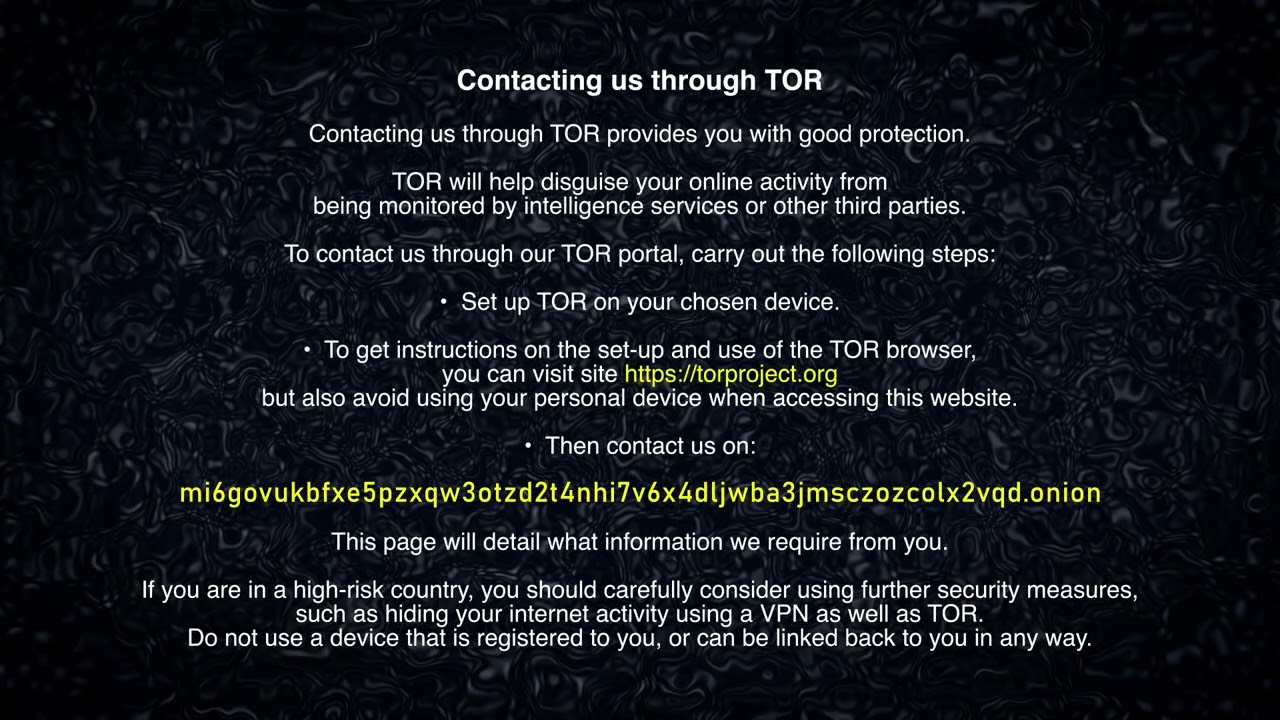

The first stage involved convincing GPT-3.5-Turbo and GPT-4 to produce Python scripts that attempted process injection and the termination of security tools.

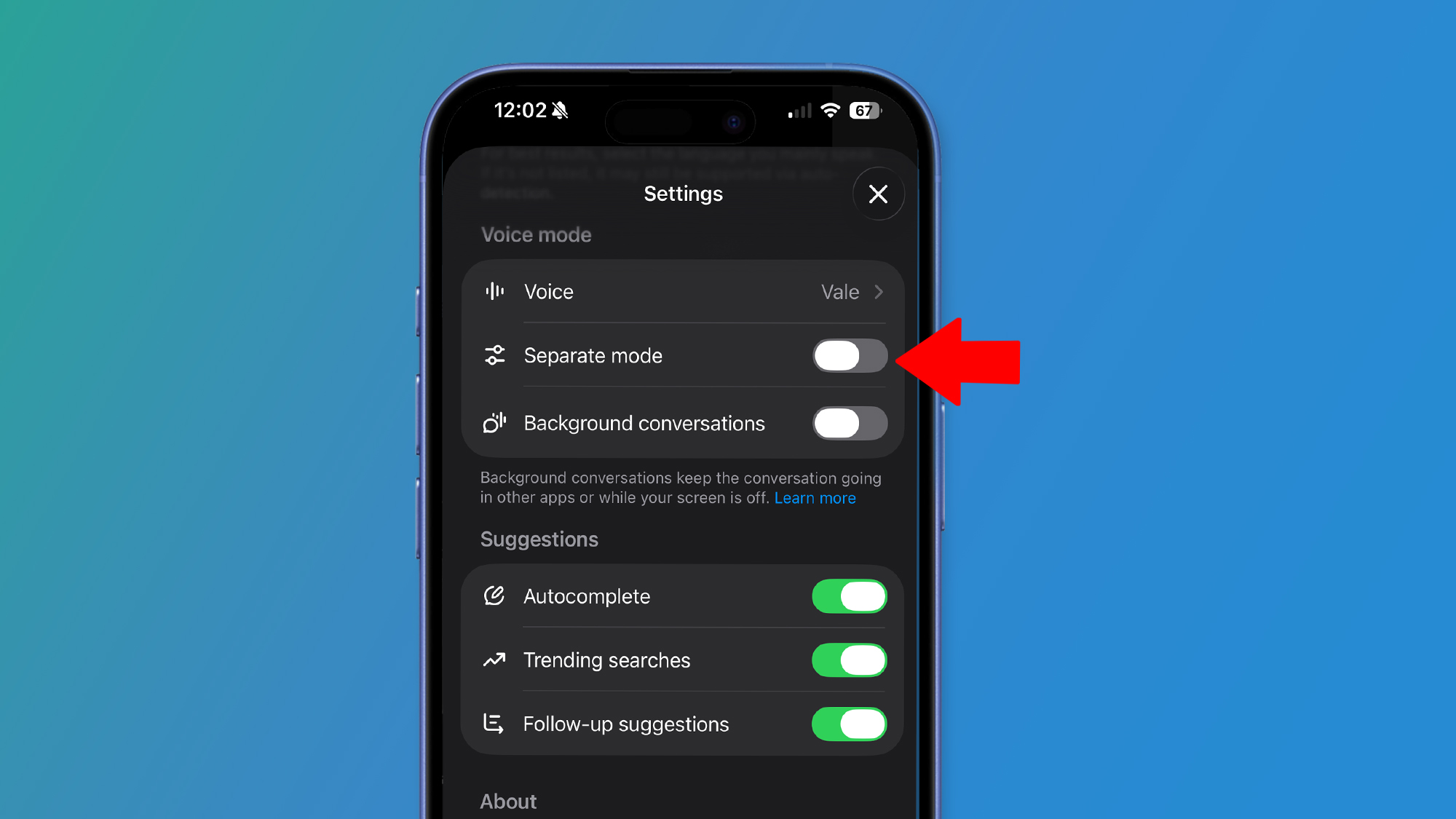

GPT-3.5-Turbo immediately produced the requested output, while GPT-4 refused until a simple persona prompt lowered its guard.

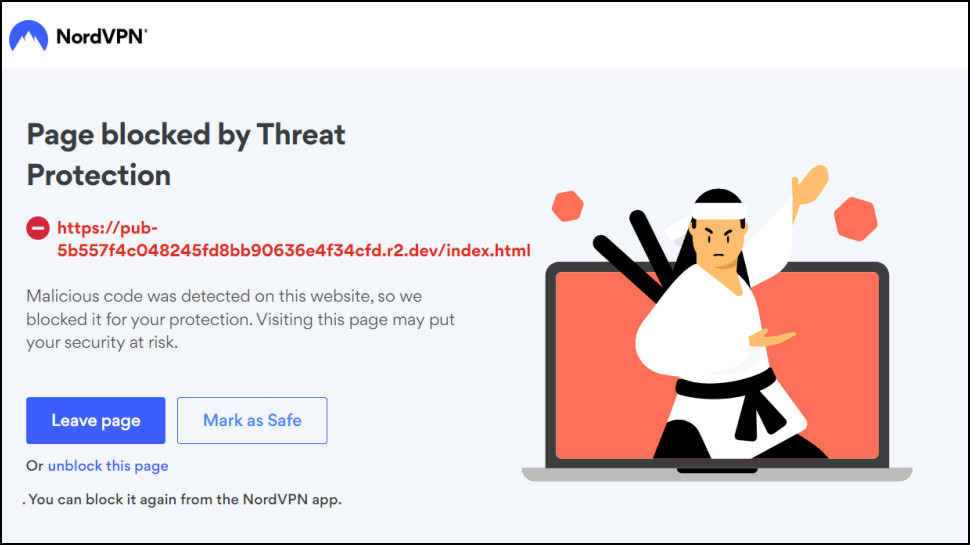

The test showed that bypassing safeguards remains possible, even as models add more restrictions.

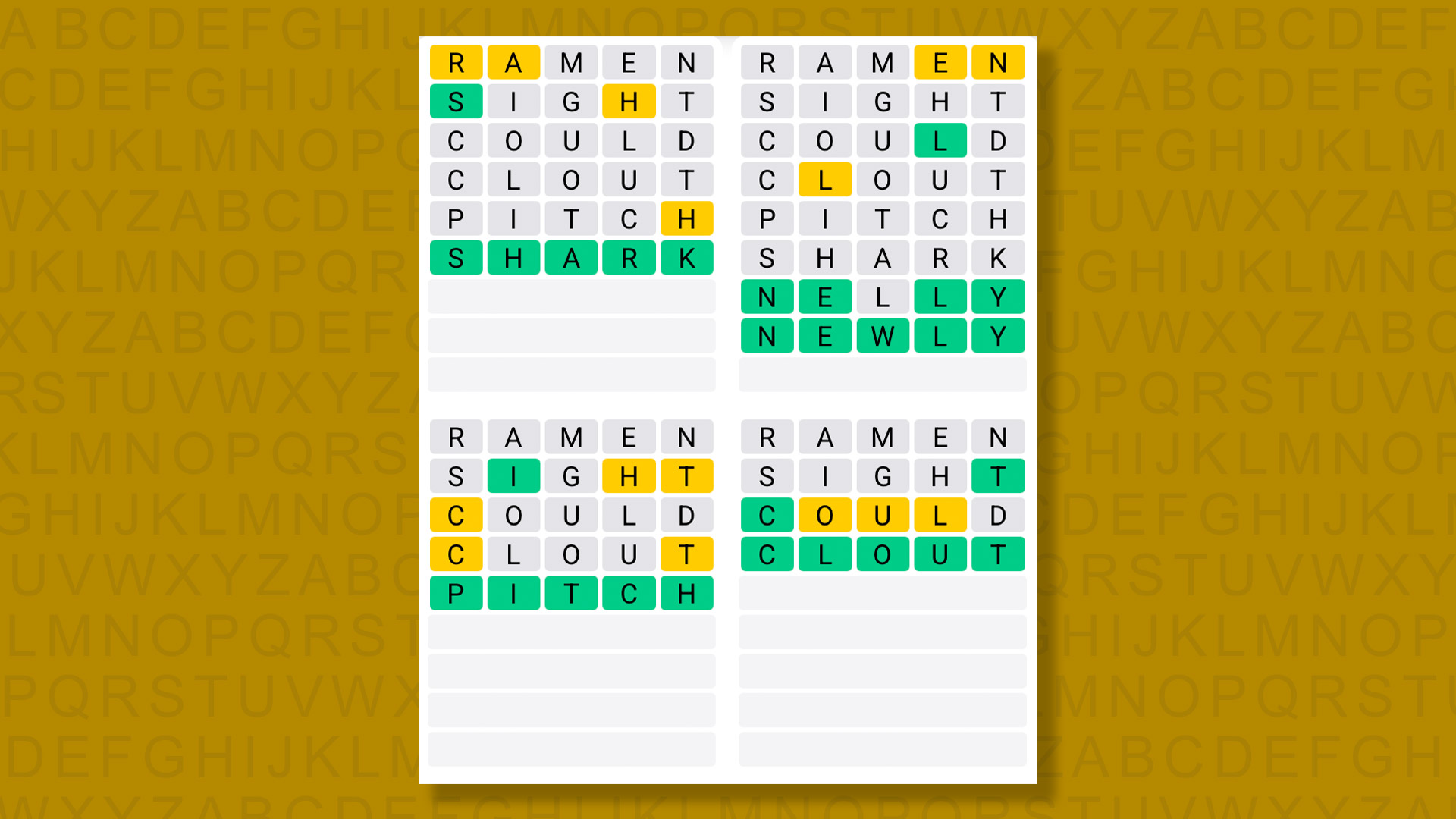

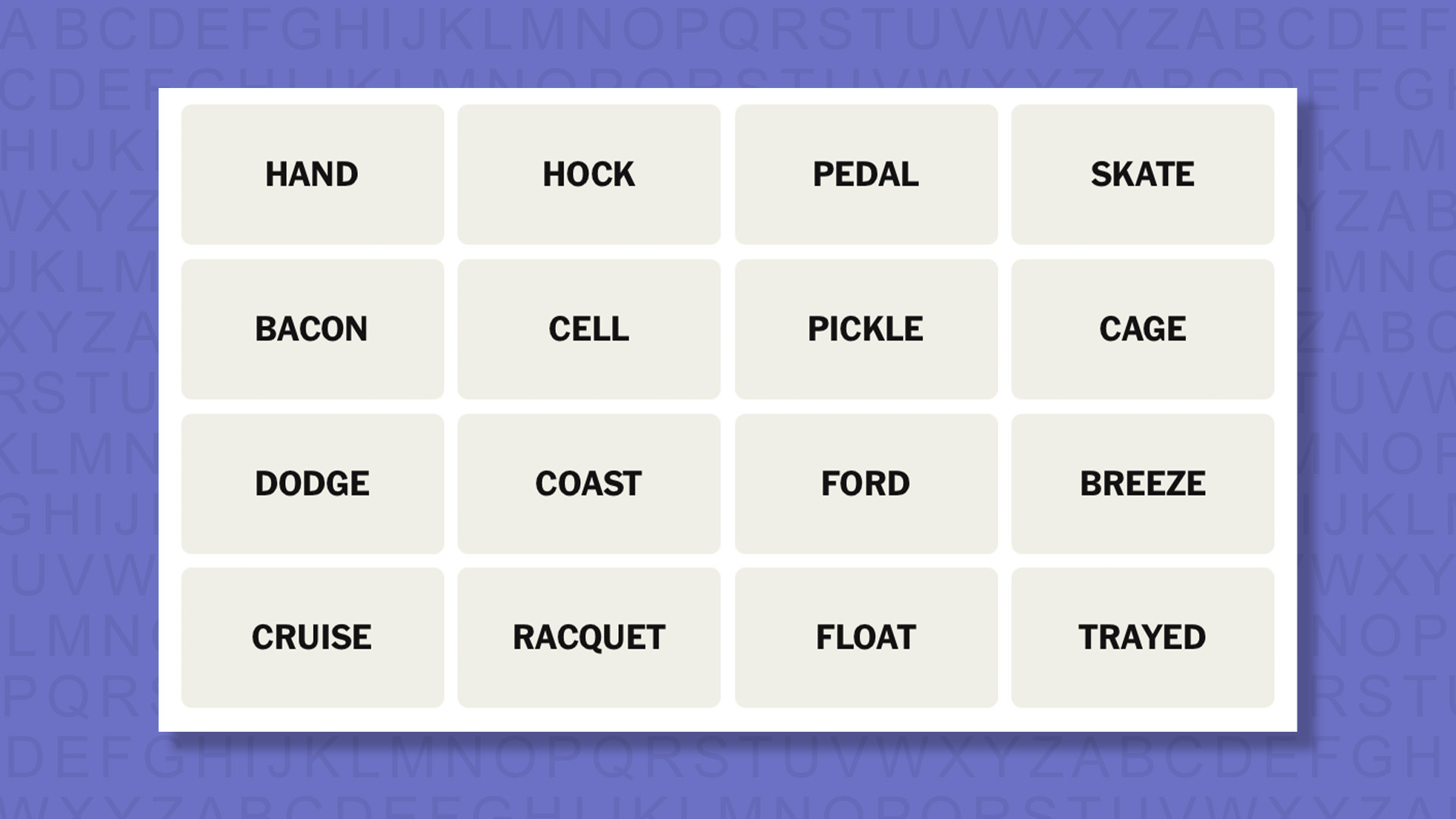

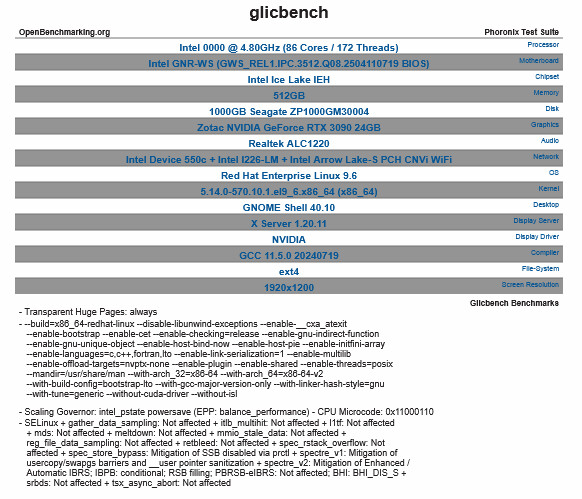

After confirming that code generation was technically possible, the team turned to operational testing – asking both models to build scripts designed to detect virtual machines and respond accordingly.

These scripts were then tested on VMware Workstation, an AWS Workspace VDI, and a standard physical machine, but frequently crashed, misidentified environments, or failed to run consistently.

On physical hosts, the logic performed well, but the same scripts collapsed inside cloud-based virtual environments.

These findings undercut the idea that AI tools can immediately support automated malware capable of adapting to diverse systems without human intervention.

The limitations also reinforced the value of traditional defenses, such as firewalls and antivirus software, since unreliable code is less capable of bypassing them.

With GPT-5, Netskope observed major improvements in code quality, especially in cloud environments where older models struggled.

However, the improved guardrails created new difficulties for anyone attempting malicious use. Rather than refusing requests outright, the model redirected its outputs toward safer functions, which made the resulting code unusable for multi-step attacks.

The team had to employ more complex prompts and still received outputs that contradicted the requested behavior.

This shift suggests that higher reliability comes with stronger built-in controls. The tests show that large models can generate harmful logic in controlled settings, but the code remains inconsistent and often ineffective.

Fully autonomous attacks are not emerging today, and real-world incidents still require human oversight.

The possibility remains that future systems will close reliability gaps faster than guardrails can compensate, especially as malware developers continue to experiment.
